- Interaction design

- Sound design

- Coding

- Machine learning

Machine learning technologies are frequently used in visual design software and applications. However, there are far fewer examples of these technologies being used for audio applications and instrument design.

The ml.cubes prototype is meant to demonstrate how this tech might allow for new forms of musical interaction, expression and playing styles.

I was inspired to begin this project after taking an online course on using machine learning to build real-time controllers for music, games and interactive art.

My main personal goal for this project was to learn through making and experimentation. I had no particular design outcome in mind, but was inspired by the materials and technology that I was working with.

A few questions I was curious about when beginning this project:

- What unconventional musical interactions can be designed using machine learning?

- How might machine learning tech enable the rapid prototyping of instrument designs?

- What do users expect while interacting with musical instruments and devices, and how might physical material selection, form and appearance influence this?

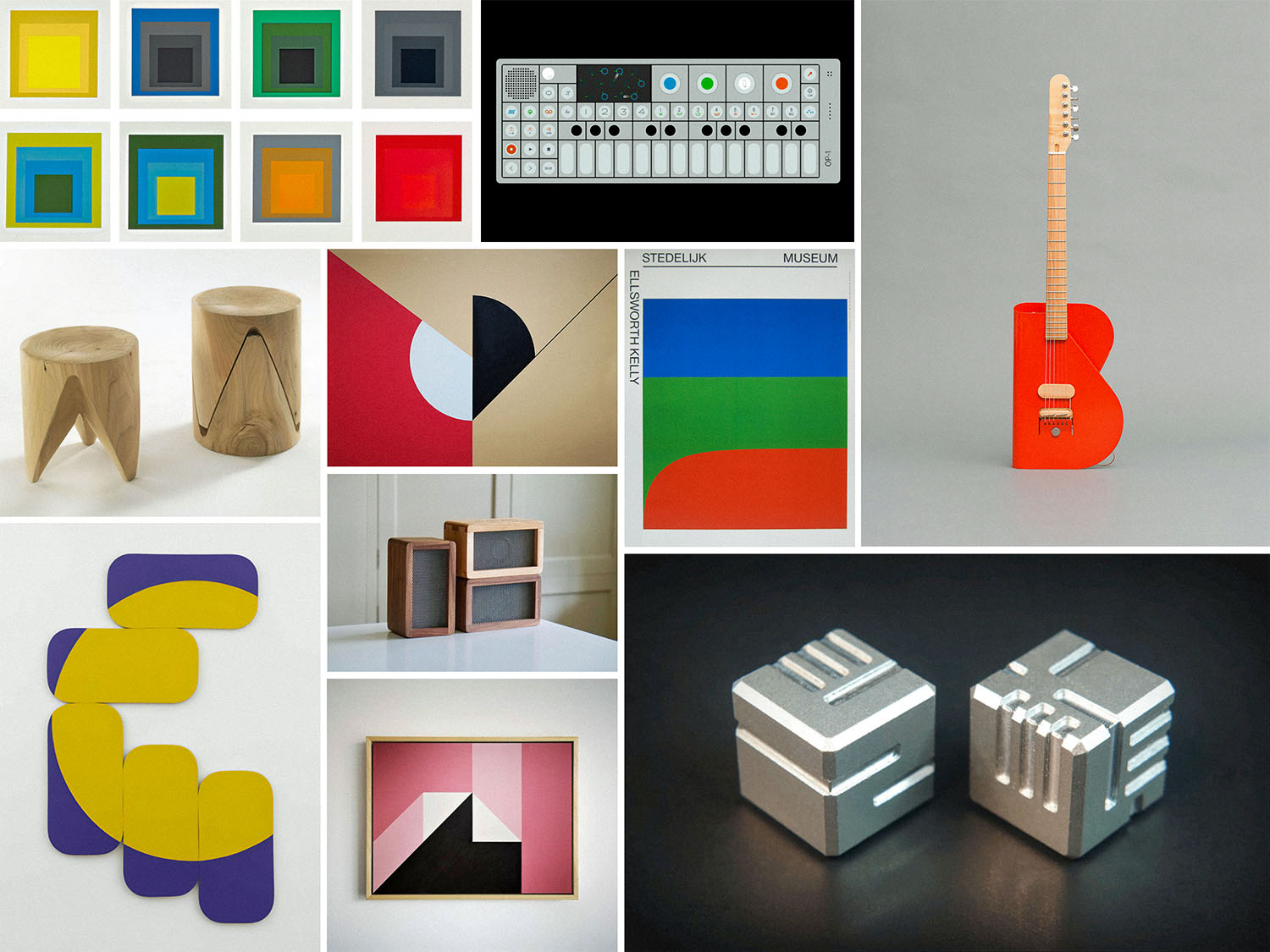

I created a moodboard to establish the tone of my instrument design. I came up with three themes that I wanted this product to express:

- simple and natural

- abstract, experimental and expressive

- modern, but timeless

The final design uses wooden cube enclosures painted in a hard-edge style. The cubes don't have any controls, switches or lights and simply start to play sound the moment they are moved.

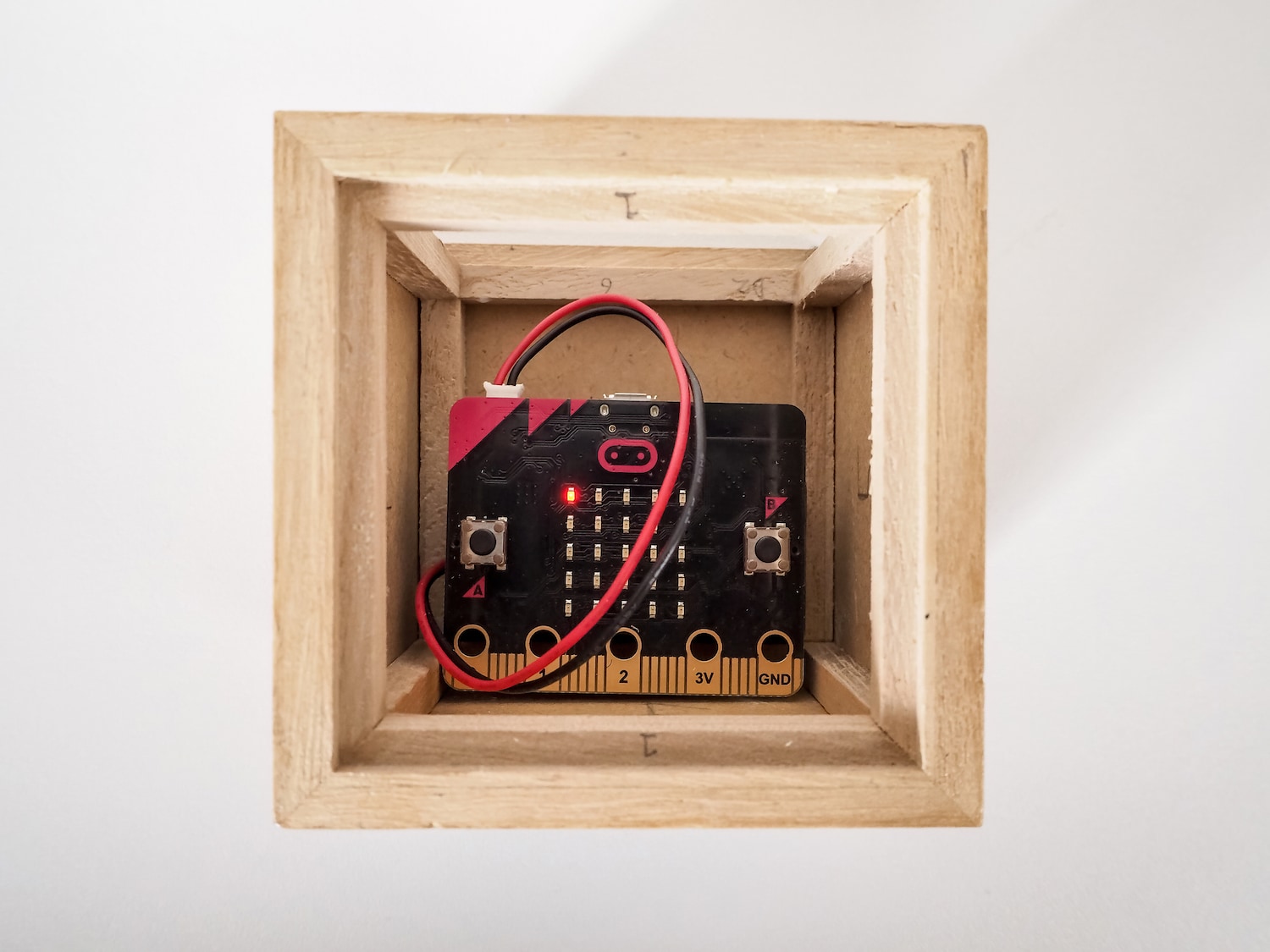

The ml.cubes send accelerometer data wirelessly over Bluetooth to the ml.cubes program. This data is fed to a support vector machine (SVM) model, which detects which face of each cube is pointing up. Each unique combination of cube positions triggers a change in audio effects. The wireless design lets the cubes be positioned on top of a table, piano, guitar amplifier, or wherever else feels appropriate during a live performance.
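To make the data flow above concrete, here is a minimal Python sketch of the same idea. It is not the project's code: the real prototype runs in Max with an SVM, whereas this stand-in simply picks the axis on which gravity is strongest. The face labels, the preset table, the assumption of two cubes, and the assumption that readings are in g are all hypothetical.

```python
from itertools import product

# Hypothetical face labels; the project does not name its cube faces.
FACES = ("x+", "x-", "y+", "y-", "z+", "z-")


def detect_face(ax, ay, az):
    """Return the label of the axis that gravity dominates.

    Stand-in for the SVM classifier: a cube at rest reads roughly
    +/-1 g on one axis and close to 0 g on the other two.
    """
    readings = {"x": ax, "y": ay, "z": az}
    axis = max(readings, key=lambda name: abs(readings[name]))
    sign = "+" if readings[axis] >= 0 else "-"
    return axis + sign


# Hypothetical effect table: one preset index per (cube A, cube B) pair.
PRESETS = {pair: index for index, pair in enumerate(product(FACES, FACES))}


def select_preset(sample_a, sample_b):
    """Map one accelerometer sample per cube to a preset index."""
    return PRESETS[(detect_face(*sample_a), detect_face(*sample_b))]
```

With two six-sided cubes, this table holds 6 × 6 = 36 combinations. A trained classifier such as an SVM can be more tolerant of tilted or noisy readings than this fixed rule, and it can be retrained on new poses without rewriting any logic.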

I designed physically modelled software instruments to achieve realistic sounds. I also created a simple graphical user interface to show which position each cube is currently in.

Technologies I used for this project include:

- MicroBit wireless accelerometers

- Ml.lib SVM machine learning library

- IRCAM's Modalys

- Cycling '74 Max software

My biggest takeaway from this project was how personal experimentation and interaction with physical materials and tech can lead to more unconventional product designs. For example, the more I experimented with the wooden cubes the more I understood their physical affordances.

The cube shapes invite users to turn and flip the controllers, and I used these natural movements and transitions to design intuitive interactions. The number of combinations that are possible with the design suggests to the user that experimentation is the best way forward.